This blog post explains how to build a Human Activity Classifier with Azure Machine Learning. The classifier predicts a person’s activity class from data recorded by wearable sensors. The complete experiment can be downloaded from the Azure Machine Learning Gallery.

Description of the Human Activity Classifier

This classifier predicts somebody’s activity class (sitting, standing up, standing, sitting down, or walking). It is based on the Human Activity Recognition dataset. Human Activity Recognition (HAR) is an active research area whose results could benefit the development of assistive technologies that support the care of the elderly, the chronically ill, and people with special needs. Activity recognition can provide information about patients’ routines to support the development of e-health systems. Two approaches are commonly used for HAR: image processing and wearable sensors. In this case we use data generated by wearable sensors (Ugulino et al., 2012).

Data Processing and Analysis

This experiment demonstrates how to use some of the basic data processing modules (Project Columns, Metadata Editor) as well as a module for computing basic statistics on a dataset (Descriptive Statistics). In addition, we use the Execute R module to visualize the correlations. We test several models (Multiclass Decision Jungle, Multiclass Decision Forest, and Multiclass Neural Network) and compare their results using another Execute R module.

In this sample we use the Human Activity Recognition data from its original source: http://groupware.les.inf.puc-rio.br/har#ixzz2PyRdbAfA. More information can be found in the UCI repository. Although the UCI repository states that there are no missing values, we had to delete 1 row containing invalid values. After removing it, we uploaded the file to the Azure Machine Learning environment.

The data were collected during 8 hours of activities: 2 hours with each of 4 healthy adults (2 men and 2 women). Each participant wore 4 LilyPad Arduino accelerometers, positioned on the waist, left thigh, right ankle, and right arm. This resulted in a dataset with 165632 rows and 19 columns:

- user (text)

- gender (text)

- age (integer)

- how_tall_in_meters (real)

- weight (integer)

- body_mass_index (real)

- x1 (integer, value read from axis ‘x’ of the 1st accelerometer, mounted on the waist)

- y1 (integer, value read from axis ‘y’ of the 1st accelerometer, mounted on the waist)

- z1 (integer, value read from axis ‘z’ of the 1st accelerometer, mounted on the waist)

- x2 (integer, value read from axis ‘x’ of the 2nd accelerometer, mounted on the left thigh)

- y2 (integer, value read from axis ‘y’ of the 2nd accelerometer, mounted on the left thigh)

- z2 (integer, value read from axis ‘z’ of the 2nd accelerometer, mounted on the left thigh)

- x3 (integer, value read from axis ‘x’ of the 3rd accelerometer, mounted on the right ankle)

- y3 (integer, value read from axis ‘y’ of the 3rd accelerometer, mounted on the right ankle)

- z3 (integer, value read from axis ‘z’ of the 3rd accelerometer, mounted on the right ankle)

- x4 (integer, value read from axis ‘x’ of the 4th accelerometer, mounted on the right upper arm)

- y4 (integer, value read from axis ‘y’ of the 4th accelerometer, mounted on the right upper arm)

- z4 (integer, value read from axis ‘z’ of the 4th accelerometer, mounted on the right upper arm)

- class (text, the activity class to be predicted)
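To make the column types concrete, here is a minimal Python sketch (not part of the Azure ML experiment) that converts one raw record into typed values covering the 19 columns, including the class label, and rejects any record with an absent or unparseable field. That is one way to catch an invalid row like the one we deleted. `COLUMN_TYPES`, `to_float` and `parse_record` are hypothetical names, and accepting a decimal comma is an assumption about the raw file format.

```python
from typing import Callable, Dict, Optional


def to_float(text: str) -> float:
    """Parse a real number, accepting a decimal comma as well as a point (assumption about the raw file)."""
    return float(text.replace(",", "."))


# Hypothetical schema: expected type of each of the 19 columns.
COLUMN_TYPES: Dict[str, Callable[[str], object]] = {
    "user": str,
    "gender": str,
    "age": int,
    "how_tall_in_meters": to_float,
    "weight": int,
    "body_mass_index": to_float,
    # x1, y1, z1, ..., x4, y4, z4: integer readings of the four accelerometers.
    **{f"{axis}{sensor}": int for sensor in range(1, 5) for axis in "xyz"},
    "class": str,
}


def parse_record(raw: Dict[str, str]) -> Optional[Dict[str, object]]:
    """Convert one raw record to typed values, or return None if a field is absent or does not parse."""
    typed: Dict[str, object] = {}
    for column, convert in COLUMN_TYPES.items():
        value = raw.get(column)
        if value is None or not value.strip():
            return None
        try:
            typed[column] = convert(value.strip())
        except ValueError:
            # An invalid value (e.g. text in a numeric column) rejects the whole record.
            return None
    return typed
```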

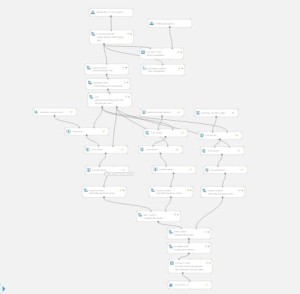

The following diagram shows the entire experiment. Because the experiment is too large to fit on one screen, it is easier to download the sample and view it in Azure ML Studio.

First, we handle possible missing data with the Clean Missing Data module, deleting every row that contains missing data. Although this dataset has no missing data, it is advisable to check your incoming data when you publish your model as a web service.
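The row-deletion strategy described above can be illustrated with a short Python sketch. It is not the Clean Missing Data module itself; it assumes each row is a dictionary of raw string values and that `None` or a blank string marks a missing value. `is_missing` and `remove_rows_with_missing` are hypothetical helpers. Returning the number of dropped rows makes it easy to log how much incoming data a web service would reject.

```python
from typing import Dict, List, Optional, Tuple

Row = Dict[str, Optional[str]]


def is_missing(value: Optional[str]) -> bool:
    """Treat None and empty or whitespace-only strings as missing."""
    return value is None or value.strip() == ""


def remove_rows_with_missing(rows: List[Row]) -> Tuple[List[Row], int]:
    """Drop every row containing at least one missing value; return kept rows and the number dropped."""
    kept = [row for row in rows if not any(is_missing(v) for v in row.values())]
    return kept, len(rows) - len(kept)


if __name__ == "__main__":
    sample = [{"user": "a", "x1": "-3"}, {"user": "b", "x1": ""}, {"user": None, "x1": "7"}]
    kept, dropped = remove_rows_with_missing(sample)
    print(len(kept), dropped)  # prints "1 2"
```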

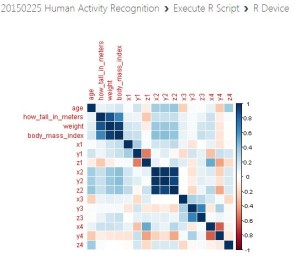

We added an instance of the Descriptive Statistics module to produce some basic statistics. In addition, we checked the pairwise correlations between the variables (excluding gender and class, as they are categorical) using the Execute R module.
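The experiment computes and plots the correlations in R inside an Execute R module. For readers who want to see what that step computes, here is an independent Python sketch of a pairwise Pearson correlation matrix over numeric columns only; categorical columns such as gender and class must be left out before calling it. The function names are hypothetical, and returning NaN for a constant column is a design choice of this sketch.

```python
import math
from typing import Dict, Sequence


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation of two equally long sequences (NaN if either is constant)."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length, at least 2 values each")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return float("nan")
    return cov / math.sqrt(var_x * var_y)


def correlation_matrix(columns: Dict[str, Sequence[float]]) -> Dict[str, Dict[str, float]]:
    """Pairwise Pearson correlations between numeric columns (categorical ones removed beforehand)."""
    names = list(columns)
    return {a: {b: pearson(columns[a], columns[b]) for b in names} for a in names}
```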

Observe that there is a strong correlation between height (how_tall_in_meters), weight (weight), and BMI (body_mass_index). This is not surprising, because BMI is calculated from height and weight. We therefore exclude body_mass_index using the Project Columns module, and we exclude user as well. We then use the Metadata Editor module to specify gender as a categorical variable.
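The redundancy follows from the standard BMI definition: weight in kilograms divided by the square of height in metres, so body_mass_index is fully determined by the other two columns. The Python sketch below shows that formula together with a minimal stand-in for the column exclusion done with Project Columns. It assumes weight is recorded in kilograms, and `project_columns` is a hypothetical helper, not an Azure ML API.

```python
from typing import Dict, Iterable, List


def body_mass_index(height_m: float, weight_kg: float) -> float:
    """Standard BMI: weight in kilograms divided by the square of height in metres."""
    return weight_kg / height_m ** 2


def project_columns(rows: Iterable[Dict[str, object]], exclude: Iterable[str]) -> List[Dict[str, object]]:
    """Hypothetical stand-in for Project Columns: copy each row without the excluded columns."""
    dropped = set(exclude)
    return [{name: value for name, value in row.items() if name not in dropped} for row in rows]


# The experiment excludes body_mass_index (redundant with height and weight) and user.
EXCLUDED = ["body_mass_index", "user"]
```

For example, `project_columns([{"user": "u1", "age": 46, "body_mass_index": 28.6}], EXCLUDED)` returns `[{"age": 46}]`.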

We split the dataset into a training set (70%) and a test set (30%).
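Azure ML performs this split inside the experiment. As an illustration only, a randomized 70/30 split can be sketched in Python as below. The function name `split_rows` and the default seed 42 are arbitrary assumptions; a fixed seed simply makes the split reproducible between runs. With this sketch's rounding, the 165632 rows would yield 115942 training rows and 49690 test rows.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def split_rows(rows: Sequence[T], train_fraction: float = 0.7, seed: int = 42) -> Tuple[List[T], List[T]]:
    """Randomly split rows into (train, test); a fixed seed makes the split reproducible."""
    if not 0.0 < train_fraction < 1.0:
        raise ValueError("train_fraction must lie strictly between 0 and 1")
    shuffled = list(rows)  # copy so the caller's data is not reordered
    random.Random(seed).shuffle(shuffled)
    cut = round(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]
```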

To find the best-fitting model, we test three different models.

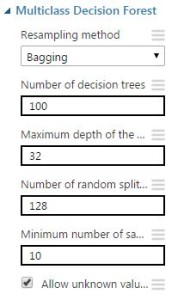

Multiclass Decision Forest model settings

Multiclass Neural Network model settings

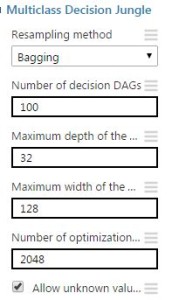

Multiclass Decision Jungle model settings

Results

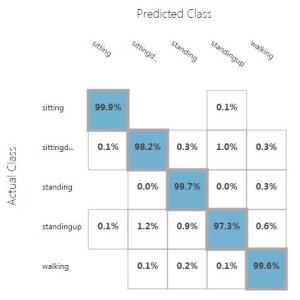

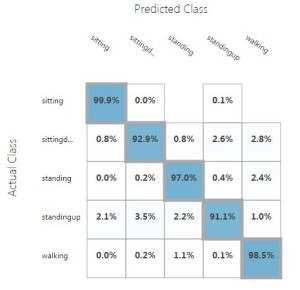

First, we check the results of the three models with respect to overall accuracy and per-class precision.

| Measure | Model 1 | Model 2 | Model 3 |
|---|---|---|---|
| Overall accuracy | 0.9943 | 0.9738 | 0.9851 |
| Average accuracy | 0.9977 | 0.9895 | 0.9940 |
| Micro-averaged precision | 0.9943 | 0.9738 | 0.9851 |
| Macro-averaged precision | 0.9910 | 0.9645 | 0.9784 |
| Micro-averaged recall | 0.9943 | 0.9738 | 0.9851 |
| Macro-averaged recall | 0.9893 | 0.9610 | 0.9706 |
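All of these aggregate metrics can be derived from a multiclass confusion matrix. The Python sketch below assumes rows are actual classes and columns are predicted classes, and it assumes that "average accuracy" is the mean of the per-class one-vs-rest accuracies. That assumption is consistent with the table: with five classes it gives 1 - 2 × (1 - 0.9943) / 5 ≈ 0.9977 for Model 1. The sketch also shows why micro-averaged precision, micro-averaged recall and overall accuracy coincide: in single-label classification every error is one false positive for some class and one false negative for another, so both micro averages reduce to correct predictions divided by all predictions. `multiclass_metrics` is a hypothetical name.

```python
from typing import Dict, List


def multiclass_metrics(cm: List[List[int]]) -> Dict[str, float]:
    """Aggregate metrics from a confusion matrix cm[actual][predicted]."""
    k = len(cm)
    total = sum(sum(row) for row in cm)
    tp = [cm[i][i] for i in range(k)]
    predicted = [sum(cm[r][i] for r in range(k)) for i in range(k)]  # column sums
    actual = [sum(cm[i]) for i in range(k)]  # row sums
    correct = sum(tp)
    # One-vs-rest accuracy per class: (TP + TN) / total = (total - FP - FN) / total.
    per_class_acc = [
        (total - (predicted[i] - tp[i]) - (actual[i] - tp[i])) / total for i in range(k)
    ]
    precision = [tp[i] / predicted[i] if predicted[i] else 0.0 for i in range(k)]
    recall = [tp[i] / actual[i] if actual[i] else 0.0 for i in range(k)]
    return {
        "overall_accuracy": correct / total,
        "average_accuracy": sum(per_class_acc) / k,
        "micro_precision": correct / sum(predicted),  # equals overall accuracy
        "macro_precision": sum(precision) / k,
        "micro_recall": correct / sum(actual),  # equals overall accuracy
        "macro_recall": sum(recall) / k,
    }
```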

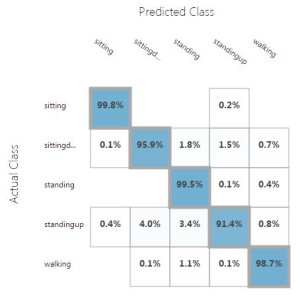

We combined the results of the three models using an Execute R module. Model 1, a Multiclass Decision Forest model, gave the best results. The highest precision was obtained when predicting whether somebody was sitting, standing, or walking. Predicting whether somebody was standing up or sitting down had slightly lower precision, albeit still very high.

| Class | Precision (Model 1) | Precision (Model 2) | Precision (Model 3) |
|---|---|---|---|
| Sitting | 0.9996 | 0.9926 | 0.9987 |
| Sitting Down | 0.9826 | 0.9463 | 0.9536 |
| Standing | 0.9945 | 0.9817 | 0.9766 |
| Standing Up | 0.9837 | 0.9506 | 0.9722 |
| Walking | 0.9944 | 0.9638 | 0.9911 |
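The comparison itself was done in R inside an Execute R module. As an illustration only (not the original script), the Python sketch below holds the per-class precision values from the table and reports, for each class, which model scores highest and, for each model, its weakest class. With these numbers it selects Model 1 for every class and reports Sitting Down as the weakest class of all three models. The function names are hypothetical.

```python
from typing import Dict

# Per-class precision values copied from the table above.
PRECISION: Dict[str, Dict[str, float]] = {
    "Model 1": {"Sitting": 0.9996, "Sitting Down": 0.9826, "Standing": 0.9945,
                "Standing Up": 0.9837, "Walking": 0.9944},
    "Model 2": {"Sitting": 0.9926, "Sitting Down": 0.9463, "Standing": 0.9817,
                "Standing Up": 0.9506, "Walking": 0.9638},
    "Model 3": {"Sitting": 0.9987, "Sitting Down": 0.9536, "Standing": 0.9766,
                "Standing Up": 0.9722, "Walking": 0.9911},
}


def best_model_per_class(precision: Dict[str, Dict[str, float]]) -> Dict[str, str]:
    """For each class, the model with the highest precision."""
    classes = next(iter(precision.values()))
    return {c: max(precision, key=lambda model: precision[model][c]) for c in classes}


def weakest_class_per_model(precision: Dict[str, Dict[str, float]]) -> Dict[str, str]:
    """For each model, the class it predicts with the lowest precision."""
    return {model: min(scores, key=scores.get) for model, scores in precision.items()}


if __name__ == "__main__":
    print(best_model_per_class(PRECISION))
    print(weakest_class_per_model(PRECISION))
```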

Sources

Data

Dataset: http://groupware.les.inf.puc-rio.br/har#dataset

Information about the data: https://archive.ics.uci.edu/ml/datasets/Wearable+Computing%3A+Classification+of+Body+Postures+and+Movements+(PUC-Rio)

Paper and Presentation

Ugulino, W.; Cardador, D.; Vega, K.; Velloso, E.; Milidiu, R.; Fuks, H. Wearable Computing: Accelerometers’ Data Classification of Body Postures and Movements. Proceedings of 21st Brazilian Symposium on Artificial Intelligence. Advances in Artificial Intelligence – SBIA 2012. In: Lecture Notes in Computer Science, pp. 52-61. Curitiba, PR: Springer Berlin / Heidelberg, 2012. ISBN 978-3-642-34458-9. DOI: 10.1007/978-3-642-34459-6_6.


Additional information

More about the corrplot package: http://cran.r-project.org/web/packages/corrplot/vignettes/corrplot-intro.html

How to upload an R package to Azure ML: http://blogs.msdn.com/b/benjguin/archive/2014/09/24/how-to-upload-an-r-package-to-azure-machine-learning.aspx